Generative text-to-image models have recently become very popular. Since I had a bunch of fully captioned images left over from the This JACS Does Not Exist project, I trained a Stable Diffusion checkpoint on that dataset (~60K JACS table-of-contents images paired with their paper titles). It seems to work pretty well. Here are some example prompts and the images they generated:

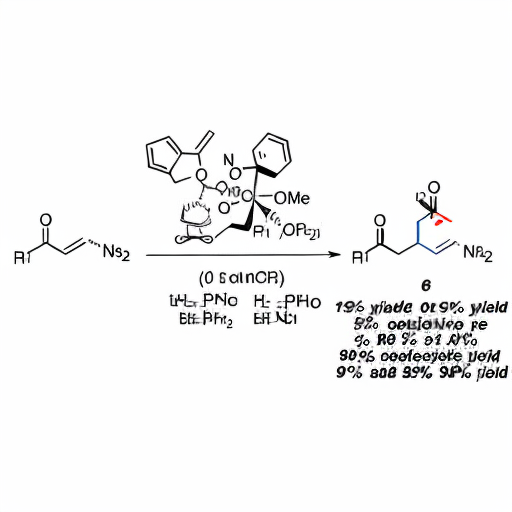

“Development of a Highly Efficient and Selective Catalytic Enantioselective Hydrogenation for Organic Synthesis”

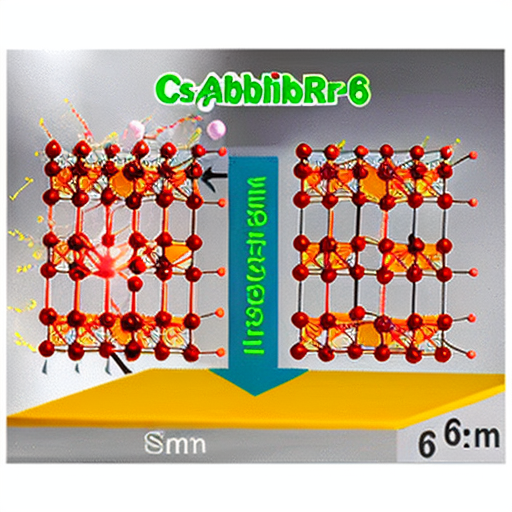

“Lead-free Cs2AgBiBr6 Perovskite Solar Cells with High Efficiency and Stability”

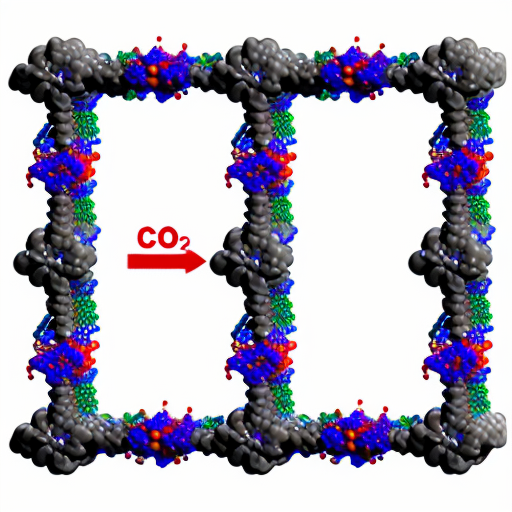

“A Triazine-Based Covalent Organic Framework for High-Efficiency CO2 Capture”

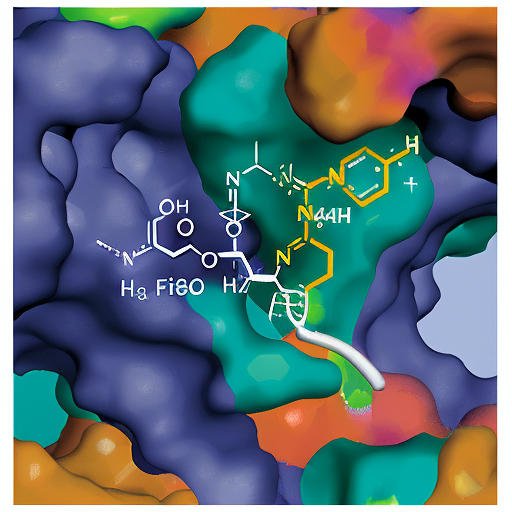

“The Design and Synthesis of a New Family of Small Molecule Inhibitors Targeting the BCL-2 Protein”

Running the model

The fun of generative models is in running them yourself, of course. I've uploaded the fine-tuned checkpoint, which can be freely downloaded. If you're not familiar with the process, here's a quick guide:

- Install a Stable Diffusion UI. I've been using this one, which has good installation instructions and works both on Windows (with NVIDIA or AMD GPUs) and on Apple silicon.

- Download the trained chemical diffusion checkpoint hosted here on Hugging Face. You just need to put the .ckpt file (~2.5GB) in the `\stable-diffusion-webui\models\Stable-diffusion` folder.

- Run the UI and have fun!
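If you'd rather script the copy step, here is a minimal sketch. It assumes you have already downloaded the checkpoint and that the UI keeps its models under `models/Stable-diffusion` inside its install directory, as in the path above. The function name `install_checkpoint` and the example file names are hypothetical.

```python
import shutil
from pathlib import Path

# Weight formats the webui model folder is assumed to accept.
ALLOWED_SUFFIXES = {".ckpt", ".safetensors"}


def install_checkpoint(ckpt_file, webui_root) -> Path:
    """Copy a downloaded checkpoint into <webui_root>/models/Stable-diffusion (hypothetical helper)."""
    src = Path(ckpt_file)
    if src.suffix not in ALLOWED_SUFFIXES:
        raise ValueError(f"expected a .ckpt or .safetensors file, got {src.name}")
    # pathlib picks the right separator, so this works on Windows, macOS and Linux.
    dest_dir = Path(webui_root) / "models" / "Stable-diffusion"
    dest_dir.mkdir(parents=True, exist_ok=True)
    dest = dest_dir / src.name
    shutil.copy2(src, dest)  # copy2 also preserves the file's timestamps
    return dest
```

For example, `install_checkpoint("Downloads/chemical-diffusion.ckpt", "stable-diffusion-webui")` (both paths hypothetical) would leave the file where the UI's model dropdown can find it after a refresh.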

Notes

Taking a page from the larger Stable Diffusion community, I found that negative prompts can clean up the generated images. I've used 'out of frame, lowres, text, error, cropped, worst quality, low quality, jpeg artifacts'.
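In the web UI, this list simply goes in the negative prompt box. If you prefer to script generation, here is a sketch of the equivalent call using the Hugging Face `diffusers` library instead of the UI. This is an alternative route, not how the images above were made. The step count and guidance scale are assumed values, and the function names are hypothetical.

```python
from typing import Any

# The negative prompt terms quoted above.
NEGATIVE_TERMS = [
    "out of frame", "lowres", "text", "error", "cropped",
    "worst quality", "low quality", "jpeg artifacts",
]


def build_generation_kwargs(prompt: str, steps: int = 30, guidance: float = 7.5) -> dict[str, Any]:
    """Keyword arguments for a diffusers StableDiffusionPipeline call (steps/guidance are assumptions)."""
    return {
        "prompt": prompt,
        "negative_prompt": ", ".join(NEGATIVE_TERMS),
        "num_inference_steps": steps,
        "guidance_scale": guidance,
    }


def generate(ckpt_path: str, prompt: str):
    """Load a single-file .ckpt with diffusers and render one image (requires a CUDA GPU)."""
    # Imported lazily so the helper above can be used without torch/diffusers installed.
    import torch
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_single_file(ckpt_path, torch_dtype=torch.float16)
    pipe = pipe.to("cuda")
    return pipe(**build_generation_kwargs(prompt)).images[0]
```

Keeping the argument building separate from the GPU call makes it easy to reuse the same negative prompt across many titles.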

Different samplers can have a big effect as well. Here’s a grid showing the same prompt with several different samplers:

“The Discovery of a Highly Efficient and Stable Iridium Catalyst for the Oxygen Reduction Reaction in Fuel Cells”
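A comparison like this can also be reproduced outside the UI. The sketch below assumes `diffusers`, `torch` and Pillow are installed. It swaps the pipeline's scheduler (diffusers' name for a sampler) while holding the prompt and seed fixed, then tiles the results into one grid. The web UI sampler names map only roughly onto these diffusers scheduler classes, and the seed, column count and function names are hypothetical.

```python
# Web UI sampler name -> roughly equivalent diffusers scheduler class.
SAMPLERS = {
    "Euler a": "EulerAncestralDiscreteScheduler",
    "Euler": "EulerDiscreteScheduler",
    "DPM++ 2M": "DPMSolverMultistepScheduler",
    "DDIM": "DDIMScheduler",
    "LMS": "LMSDiscreteScheduler",
    "Heun": "HeunDiscreteScheduler",
}


def grid_offsets(n: int, cols: int, width: int, height: int) -> list[tuple[int, int]]:
    """Top-left (x, y) paste position for each of n equal tiles, laid out row by row."""
    return [((i % cols) * width, (i // cols) * height) for i in range(n)]


def sampler_grid(ckpt_path: str, prompt: str, negative_prompt: str = "", seed: int = 42, cols: int = 3):
    """Render one image per sampler (in SAMPLERS order) and tile them into a grid."""
    import diffusers
    import torch
    from PIL import Image

    pipe = diffusers.StableDiffusionPipeline.from_single_file(
        ckpt_path, torch_dtype=torch.float16
    ).to("cuda")
    base_config = pipe.scheduler.config  # reuse the checkpoint's noise schedule settings
    tiles = []
    for cls_name in SAMPLERS.values():
        pipe.scheduler = getattr(diffusers, cls_name).from_config(base_config)
        # Re-seed for every sampler so differences come from the sampler alone.
        generator = torch.Generator("cuda").manual_seed(seed)
        result = pipe(prompt, negative_prompt=negative_prompt, generator=generator)
        tiles.append(result.images[0])

    w, h = tiles[0].size
    rows = -(-len(tiles) // cols)  # ceiling division
    grid = Image.new("RGB", (cols * w, rows * h))
    for tile, offset in zip(tiles, grid_offsets(len(tiles), cols, w, h)):
        grid.paste(tile, offset)
    return grid
```

Fixing the seed is the important design choice here: without it, the grid would mix sampler effects with ordinary seed-to-seed variation.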

Training

I'm VRAM-poor (running on an 8GB 3060 Ti), but recent optimizations have made it possible to fine-tune SD on my home system, where a few months ago twice as much memory was needed. I used the training UI in this repo, which made the process very easy. YMMV: I was able to train with a batch size of 4, but frequently ran into the dreaded CUDA out-of-memory error.
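One generic way to cope with intermittent out-of-memory errors is to probe for the largest batch size that survives a training step, then use gradient accumulation to recover a larger effective batch. The sketch below is a hypothetical helper, not a feature of the training UI mentioned above. It assumes `try_step(batch_size)` runs one training step, and relies on PyTorch raising `torch.cuda.OutOfMemoryError`, a `RuntimeError` subclass whose message contains "out of memory".

```python
from typing import Callable


def largest_fitting_batch(try_step: Callable[[int], None], start: int = 8, minimum: int = 1) -> int:
    """Halve the batch size until one training step runs without a CUDA OOM (hypothetical helper)."""
    batch = start
    while batch >= minimum:
        try:
            try_step(batch)
            return batch
        except RuntimeError as err:
            # Only back off on out-of-memory failures; re-raise every other error.
            if "out of memory" not in str(err).lower():
                raise
            # In real training code, call torch.cuda.empty_cache() here before retrying.
            batch //= 2
    raise RuntimeError("even the minimum batch size does not fit in VRAM")


def accumulation_steps(target_batch: int, micro_batch: int) -> int:
    """Gradient-accumulation steps needed to reach target_batch, rounded up."""
    return -(-target_batch // micro_batch)
```

For example, if a batch of 4 is the most that fits but you want an effective batch of 16, `accumulation_steps(16, 4)` gives 4: accumulate gradients over four micro-batches before each optimizer step.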